The launch of ChatGPT in late 2022 marked a milestone moment for artificial intelligence, bringing what was once a niche technology firmly into the mainstream. Suddenly, AI, and particularly generative AI, wasn’t just the prerogative of data scientists and tech developers. It was a feature of everyday conversations, a presence in business strategies, and a catalyst for innovation across industries. In the months since, platforms like Gemini and Perplexity have emerged, pushing the boundaries of what AI can achieve while expanding its role in the workplace.

For those of us focused on delivering exceptional employee experiences and enabling smarter ways of working, AI offers exciting opportunities. Yet, alongside its promises come significant challenges. If we’re not thoughtful in how we approach this technology, AI could exacerbate inequalities, entrench biases, and erode trust, rather than enhance efficiency, drive innovation, and help us build fairer, more inclusive workplaces.

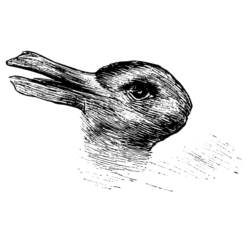

To understand the stakes, we need to start by demystifying what AI is and what it isn’t. AI refers to computer systems designed to perform tasks that typically require human intelligence, such as reasoning, learning, and problem-solving. But the term is often used loosely, lumping together everything from simple automation tools to systems capable of “learning on the job” and delivering on their objectives in the most efficient way.

Throwing the term AI at anything vaguely techy has muddied the waters. This lazy labelling fuels both overhype and over-trust. Just because it’s got ‘intelligence’ in the name doesn’t mean it’s intelligent. And let’s be clear: intelligence comes in many shades. Some of the cleverest people on the planet are psychopaths. Plus, being “smart” doesn’t always make you trustworthy, thoughtful, or even useful.

This tendency to anthropomorphise AI makes it even more important to approach it cautiously. It’s not a friend or a foe. It’s a tool. A useful lens here is psychologist Howard Gardner’s theory of multiple intelligences. While AI excels at logical reasoning and data processing, it falls short in areas like empathy, creativity, and interpersonal understanding, qualities that underpin effective workplace management. That is where humans, particularly workplace professionals, come in.

Rubbish in, rubbish out

Large Language Models (LLMs), like ChatGPT, can churn out polished reports, spark ideas, and assist with internal communications. At first glance, they save time and boost efficiency. But dig deeper, and their limitations become clear. Their outputs are shaped by the data they’ve been trained on, data that often carries biases, contains inaccuracies, or lacks context. Sure, an LLM can draft a brief workplace policy, but it won’t anticipate how that policy might land with a diverse workforce. It can write an impressive sustainability report, but it won’t pause to question the authenticity of green claims.

The phrase “rubbish in, rubbish out”, popular among AI thought leaders, encapsulates the issue. If AI is fed flawed, biased, or inaccurate data, it will spit out equally flawed results. This is where humans step in, not as dustmen, but as gardeners. We prepare the soil (input data), plant the seeds (algorithms and frameworks), and tend the garden (monitor and refine the system). Like gardening, managing AI requires care, foresight, and a commitment to removing weeds, whether they’re errors, biases, or irrelevant inputs.

Alternatively, think of humans as curators, carefully selecting and organising the data and decisions that shape AI outputs. Like museum curators choosing pieces for their authenticity and quality, we ensure AI’s work reflects accuracy, ethics, and relevance. Whether gardeners or curators, humans remain responsible for overseeing, refining, and aligning AI’s outputs with real-world values. And where better to put that to the test than in the workplace?

Back to hybrid

AI’s role in workplace design and management is a complementary one. It can enhance human capabilities but can’t replace the empathy, cultural understanding, and critical thinking that organisations need to thrive. Effective leadership, employee engagement, and sustainability depend on these qualities, which AI, for all its power, can’t replicate. Yet.

A hybrid approach is the way forward. AI should handle the heavy lifting (data analysis, pattern recognition, and basic content generation), while humans take the lead in interpretation, decision-making, creation, and ethical oversight. This partnership balances efficiency with humanity, ensuring workplaces evolve in a way that remains inclusive, innovative, and sustainable.

Say “Aye” to AI

AI is here to stay, and that’s a good thing, provided we use it wisely. It can help us work smarter, achieve more, and innovate in ways we’re only beginning to explore. But let’s not treat it as a silver bullet. AI isn’t infallible, and its integration into workplace practices demands both excitement and caution. Transparency, accountability, and ongoing learning are essential to ensure it delivers on its promises without compromising the values that make workplaces thrive.

Our recent research reinforces this point. The CheatGPT? Generative Text AI Use in the UK’s PR and Communications Profession report, conducted by Magenta Associates in partnership with the University of Sussex, highlights both the opportunities and the challenges posed by AI. While 80 per cent of content writers use generative AI tools to support their day-to-day activities, most are doing so without their managers’ knowledge. And herein lies the issue: most organisations lack formal training or guidelines for responsible AI use. Without these guardrails, ethical dilemmas, transparency gaps, and legal uncertainties remain unaddressed.

The findings serve as a reminder: generative AI can enhance creativity and efficiency, but only when it’s managed responsibly. Formal training, clear ethical standards, and open dialogue are essential. AI should be a tool for progress, not an excuse for sloppy shortcuts or missteps.

The future of AI in the workplace isn’t about replacing humans; it’s about enhancing what we do best. It’s not enough to adopt the technology. We must shape its use thoughtfully, inclusively, and… intelligently.

For more insights into the ethical and operational challenges of AI, download Magenta’s latest white paper, CheatGPT? Generative Text AI Use in the UK’s PR and Communications Profession.